I know this is one of the favorite tropes of conspiracy people, but I suspect my amazing TikTok account was shadowbanned because of its (pseudo) conspiracy content.

The thing about pseudo-conspiracy content, of course, is that it is by and large indistinguishable to the naked eye (or the algorithmic eye) from “real” conspiracy content. Commentary and satire also get thrown onto the ash heap of history, without regard for fundamental differences.

The thing that’s forever tantalizing about the concept of shadowbanning is that it is all but impossible to find “proof” that it is occurring, especially with the poor-quality stats platforms generally give to users.

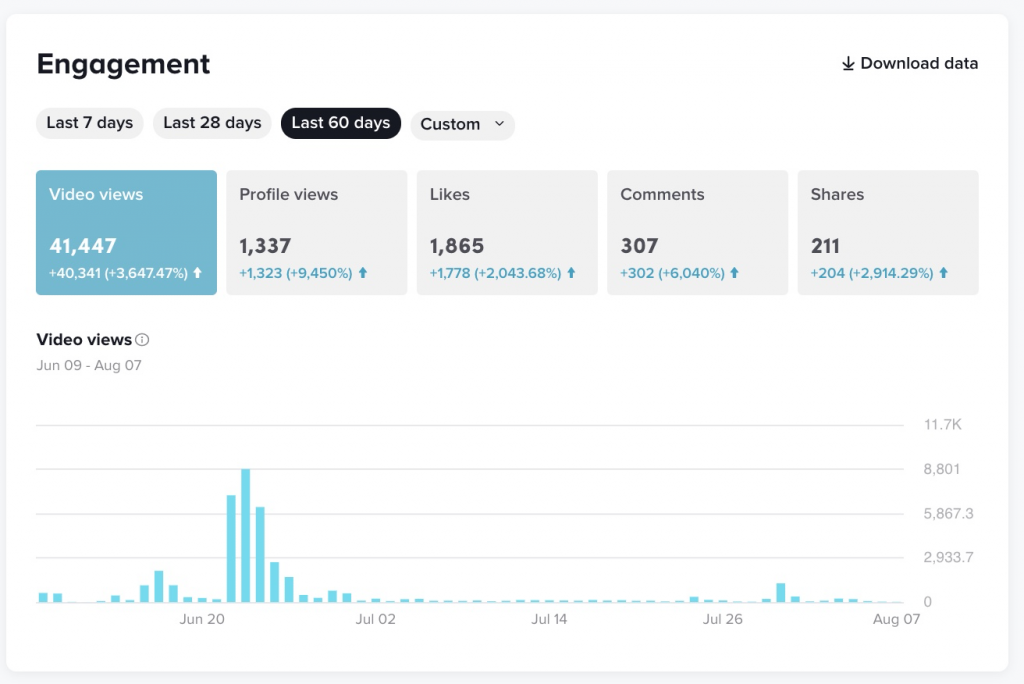

For illustrative purposes, here is the past 60 days of engagement:

Embeds on TikTok don’t tend to play nicely with WordPress, but here is the YouTube version of the video that caused the traffic spike shortly after June 20, 2021, visible in the engagement graph above:

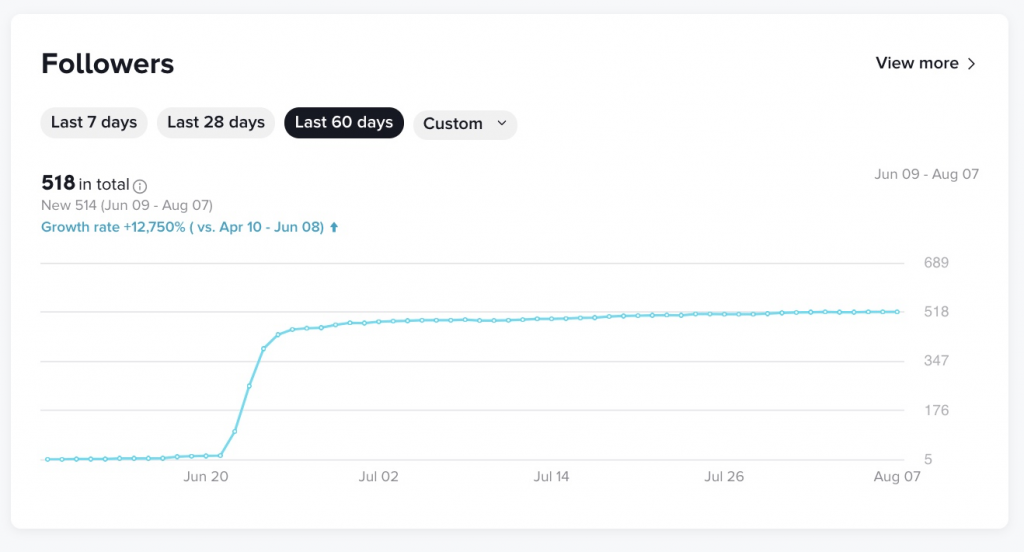

I had early success with this account by playing on Mandela Effect stuff, which is by and large harmless. After the success of the above, and a few follow-ups, I ended up leaning more into the conspiracy direction. Here is the increase in followers over the corresponding time period:

You can see the followers jumped dramatically around the same time period as the video above was posted, and then basically plateaued. But, with such a sudden and dramatic increase in followers, one would theoretically *expect* that any content posted after that bump would automatically get more traffic than content posted prior to it, purely based on distribution to followers.

But if you look at the traffic graph, that is not the case.
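A rough way to put numbers on that expectation is to compare views per follower before and after the bump. The following is only a minimal sketch: the figures are made up for illustration (they are not from this account's analytics), and the helper names are hypothetical. A falling ratio would be consistent with reduced distribution, but it would not prove a shadowban, since plenty of other things could explain it.

```python
from statistics import median

# Hypothetical numbers, for illustration only (NOT real analytics data).
# Each entry: (follower count when the video was posted, views it received)
posts_before_bump = [(1_000, 900), (1_100, 1_200), (1_200, 1_000)]
posts_after_bump = [(10_000, 1_100), (10_200, 950), (10_300, 1_300)]


def median_views(posts):
    """Typical raw view count for a set of posts."""
    return median(views for _, views in posts)


def median_views_per_follower(posts):
    """Typical views relative to audience size at posting time."""
    return median(views / followers for followers, views in posts)


before_views = median_views(posts_before_bump)
after_views = median_views(posts_after_bump)
before_ratio = median_views_per_follower(posts_before_bump)
after_ratio = median_views_per_follower(posts_after_bump)

# Fraction by which views-per-follower fell after the follower jump.
reach_drop = 1 - after_ratio / before_ratio

print(f"median views before: {before_views}, after: {after_views}")
print(f"views per follower before: {before_ratio:.2f}, after: {after_ratio:.2f}")
print(f"drop in views per follower: {reach_drop:.0%}")
```

With numbers like these, raw views stay roughly flat while followers grow tenfold, so views per follower collapse. That is the shape of the mismatch described above.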

One thing I’ve learned working for platforms, however, is that algorithms are inscrutable, even to those who develop and maintain them. The fact of the matter may very well be that there is no explanation. Or if there is, it would just be based on a “best guess” by an engineer, and that’s about as far as it could be taken.

Users of platforms, however, like to believe in the fiction that everything behind the scenes is perfectly and intentionally designed to act a certain way. While that may be the case in terms of broad strokes, it is rarely the case when applied to a specific set of detailed examples. We might be able to approximately match the overall system design when examining a single example, but as I said, it’s rare you can perfectly suss out what is going on. At least in my years of experience in the matter.

That doesn’t stop platform users from 1) theorizing, 2) assuming that they are being targeted, and 3) assuming that the targeting is happening because of their political beliefs.

Here’s an interesting example I noticed while toying with pseudo-conspiracy content on TikTok:

This is a search results page for the somewhat vanilla term “cabal” on TikTok (above). The included text reads:

No results found

This phrase may be associated with behavior or content that violates our guidelines. Promoting a safe and positive experience is TikTok’s top priority. For more information, we invite you to review our Community Guidelines.

If you poke around on Google, you can still pull up some tiktok.com results using the word “cabal,” but you can’t do it natively in TikTok’s search (at least on web, and I assume the app is the same). Here’s why, according to the BBC, July 2020:

TikTok has blocked a number of hashtags related to the QAnon conspiracy theory from appearing in search results, amid concern about misinformation, the BBC has learned…

“QAnon” and related hashtags, such as “Out of Shadows”, “Fall Cabal” and “QAnonTruth”, will no longer return search results on TikTok – although videos using the same tags will remain on the platform.

Now, my usage of #cabal was imitative of QAnon conspiracies, but I intentionally never linked my account to that overall cesspool of content, to which I am personally vehemently opposed.

The word cabal itself is, of course, a neutral and perfectly valid English word:

noun

1. a small group of secret plotters, as against a government or person in authority.

2. the plots and schemes of such a group; intrigue.

3. a clique, as in artistic, literary, or theatrical circles.

There’s even an overtly non-conspiratorial definition of that word, as you can see. And the etymology of the term is even more interesting:

cabal (n.)

1520s, “mystical interpretation of the Old Testament,” later “an intriguing society, a small group meeting privately” (1660s), from French cabal, which had both senses, from Medieval Latin cabbala (see cabbala). Popularized in English 1673 as an acronym for five intriguing ministers of Charles II (Clifford, Arlington, Buckingham, Ashley, and Lauderdale), which gave the word its sinister connotations.

And since that definition links to this one, I’m including it for reference:

cabbala (n.)

“Jewish mystic philosophy,” 1520s, also quabbalah, etc., from Medieval Latin cabbala, from Mishnaic Hebrew qabbalah “reception, received lore, tradition,” especially “tradition of mystical interpretation of the Old Testament,” from qibbel “to receive, admit, accept.” Compare Arabic qabala “he received, accepted.” Hence “any secret or esoteric science.” Related: Cabbalist.

So, because of a few bad actors, a term with many layers of rich historical significance can just be disappeared from a platform.

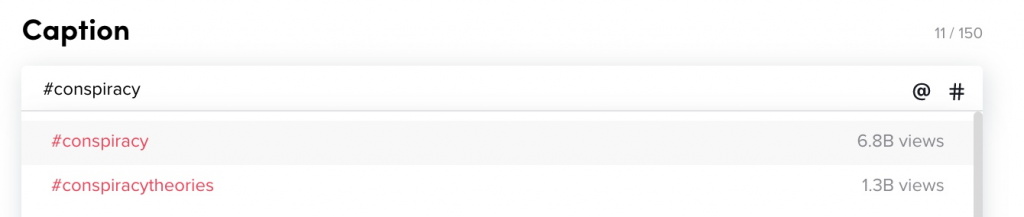

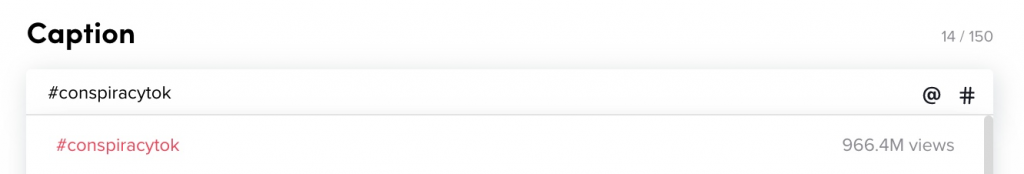

And yet, there’s no issue using other phrases related to conspiracy in general, and they have literally BILLIONS of views:

Whereas, if you type in #cabal (or #qanon), you are not presented with the dropdown to select the “official” tag, and are not shown any tally of existing views.

What I take issue with here is not the banning of QAnon-related content. I support that, and god only knows how much of it I myself banned while in a position to do so. What I take issue with instead is the heavy-handedness, inconsistency, and reactiveness of platforms in removing this content.

If they wanted to really make a difference, they should have all done it across the board at least a couple of years earlier. It was always clear what was happening, and always clear that it was dangerous. The only thing that changed, as far as I could tell, is that news outlets eventually caught wind of it and started reporting on it, challenging platforms to remove it under threat of public embarrassment.

As the BBC article linked above states:

“TikTok said it moved to restrict ‘QAnonTruth’ searches after a question from the BBC’s anti-disinformation unit, which noticed a spike in conspiracy videos using the tag. The company expressed concern that such misinformation could harm users and the general public.”

As also quoted above though, TikTok apparently did not remove the majority of that content. They simply made it harder for the average user to find. But only in that one narrow instance.

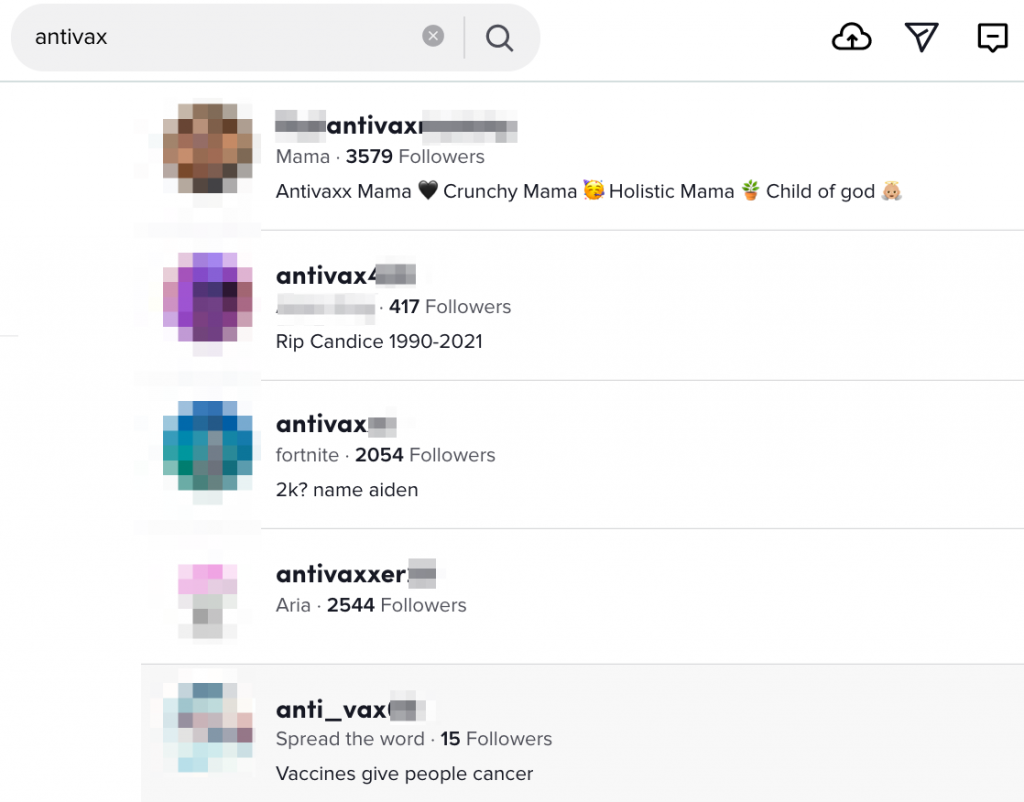

By contrast, it’s still easy to find dozens if not hundreds of “antivax” accounts, no problem. Even if that content “could harm users and the general public.” There are tons and tons of those accounts which remain active and searchable:

Now, you can choose to do personally whatever dumb thing you want with regard to the COVID vaccines, or vaccines in general. My point in illustrating this is that there is an obvious and known public harm, and yet little to nothing is done in this instance. And the cause is almost definitely that they have not (yet) been embarrassed by the BBC’s “anti-disinformation” team.

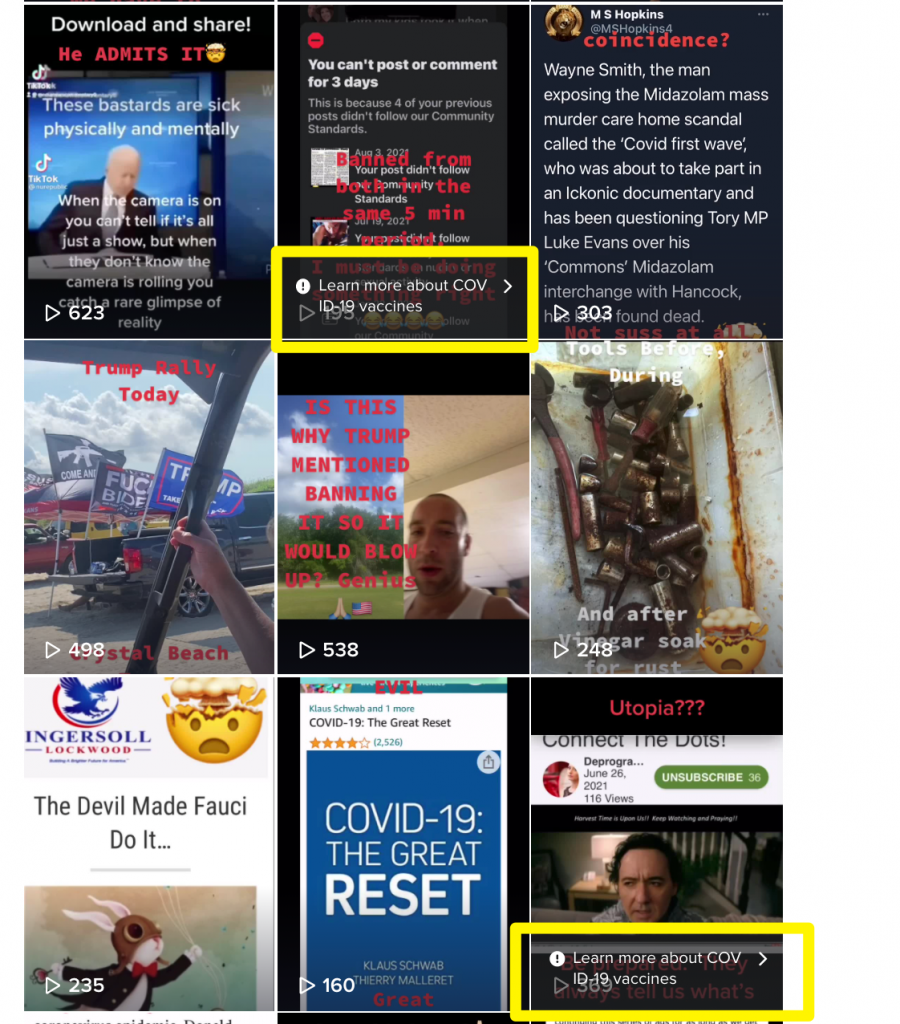

It’s worth noting, however, that they do apply a TINY label on videos which they (apparently) detect as being related to COVID misinfo (see the yellow boxes I added below to highlight the label):

Does any person in their right mind think this little tiny warning stops conspiracy people from conspiracizing? Get real. It’s a joke. Here’s how it looks on a video details page on web:

As you can see from this person’s video content in the screenshot above, when you ban or remove conspiracy content, what this signals to the conspiracy person producing or sharing that content is that they are “on the right track.” Because it’s clear to them the platforms are owned by or in cahoots with “the cabal” (or else why would that word itself be forbidden on the platform?).

No amount of fact checking, interstitial labels, or burying things from search results is going to disabuse those people of those notions. It’s just not going to work. Like ever. I’m not being hyperbolic. I’ve seen this play out in the wild thousands of times over the course of 5+ years. The pattern is always the same. “We” are not winning.

So what should platforms do? Just not police their content? Let anything go? Hardly. They should “do their best” to maintain the service that they own and pay for in roughly the shape that they determine to be the right one. But they should do it with the knowledge that the measures they take to suppress things that do not fit the shape they desire do not necessarily result in positive outcomes, or solve the fundamental societal problems which are at the root of these online behaviors.

I know no one wants to hear this. But there is no simple fix. Platforms are broken because society is broken. Truth is broken and devalued because Hyperreality is simply more engaging. If we want to have conversations with people that result in meaningful changes on these issues, we’re simply going to have to find new and more creative ways to do it, because this present set of approaches is not working.